The Future of Software: From Code to AI Agents

In this article, we break down Andrej Karpathy’s insights from his talk at YC AI Startup School, exploring how Large Language Models (LLMs) and AI agents are reshaping software development.

The software industry is undergoing a transformation unlike anything seen in the last 70 years. Software that used to consist solely of human-written code increasingly includes AI-driven applications whose behavior is learned from data or specified in natural language. Andrej Karpathy’s recent talk at YC AI Startup School outlined the key shifts happening right now, with a strong focus on Software 3.0: the era in which Large Language Models (LLMs) and AI agents become a driving force of software development.

Here’s a breakdown of the key themes from the talk and the opportunities they present.

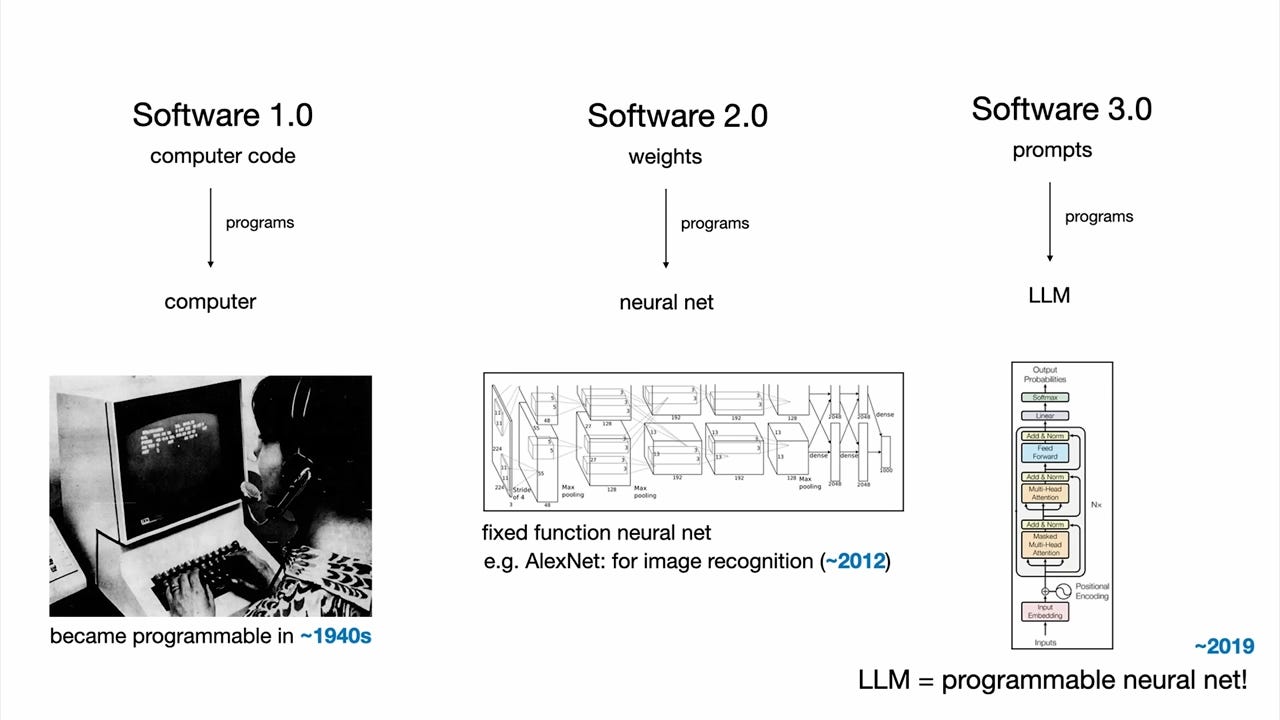

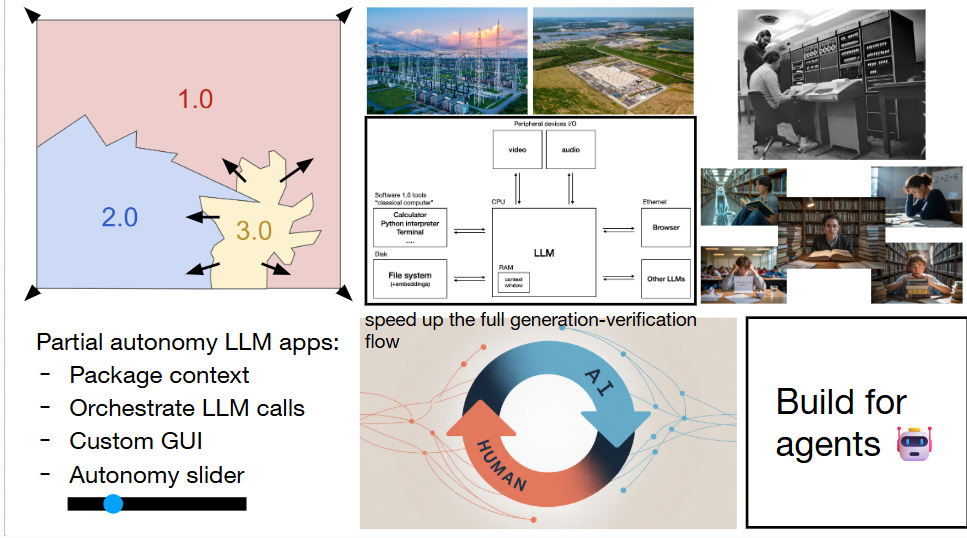

1. The Evolution of Software: Software 1.0, 2.0, and 3.0

The evolution of software has seen three distinct phases:

Software 1.0: This is the traditional era of programming where humans wrote every line of code manually (e.g., in languages like C++, Java).

Software 2.0: Enter neural networks and machine learning models. In this phase, much of the hand-written "code" is replaced by weights learned from datasets. AI systems learn from data, which allows them to perform tasks that would be too complex to hard-code. Think of Tesla’s Autopilot, where, as Karpathy has described, neural networks progressively took over functionality that was originally written as conventional code.

Software 3.0: This is the frontier we’re currently stepping into, where software is driven by natural language prompts. In this phase, Large Language Models (LLMs) like GPT-4 are programmed via simple language commands, making the process of software development drastically different. You’re no longer writing lines of code; you're interacting with your software by telling it what to do, often in English or another human language.

The rise of Software 3.0 is a major shift: natural-language prompts serve as the new "code," and the LLM acts as the computer that runs them. This is reshaping industries and the way we think about programming.
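To make the contrast concrete, here is a minimal sketch of one task, sentiment classification, written in the Software 1.0 style and then restated as a Software 3.0 prompt. The task, the keyword lists, and the names `classify_v1` and `build_prompt_v3` are illustrative assumptions, not taken from the talk. A Software 2.0 version would replace the rules with weights learned from labeled reviews and is omitted here. No model is actually called.

```python
# Software 1.0: behavior is spelled out by hand as explicit rules.
# The keyword lists are illustrative assumptions.
POSITIVE_WORDS = {"great", "love", "excellent", "good"}
NEGATIVE_WORDS = {"terrible", "hate", "awful", "bad"}


def classify_v1(review: str) -> str:
    """Hand-written rules: compare counts of positive and negative keywords."""
    words = [w.strip(".,!?").lower() for w in review.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt handed to an LLM.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following review as positive, "
    "negative, or neutral. Reply with one word.\n\n"
    "Review: {review}"
)


def build_prompt_v3(review: str) -> str:
    """Fill in the prompt; sending it to a model is left to the caller."""
    return PROMPT_TEMPLATE.format(review=review)


if __name__ == "__main__":
    print(classify_v1("I love this, it is great!"))
    print(build_prompt_v3("I love this, it is great!"))
```

In the first version, changing behavior means editing the rules. In the second, it means editing the English text of the prompt.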

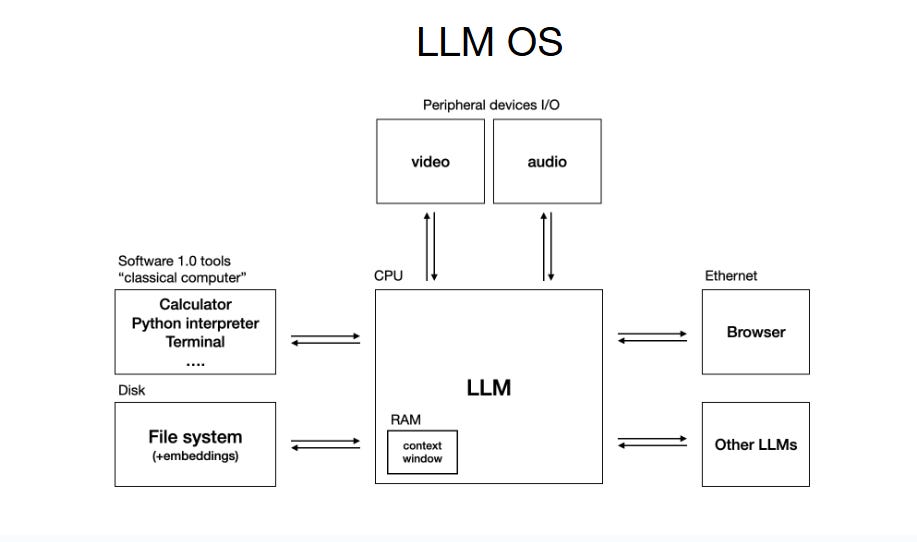

2. LLMs: Utilities, Fabs, and Operating Systems

Karpathy discussed the importance of LLMs by drawing analogies to familiar infrastructures:

LLMs as Utilities: Like an electricity provider, an LLM lab spends heavily on capital expenditures (CAPEX) to train models and on operational costs (OPEX) to serve them to users as an ongoing service. When such a service goes down, it feels like an “intelligence brownout”: work that depends on the model slows or stops.

LLMs as Fabs: Like semiconductor fabrication plants (fabs), LLMs require huge upfront investments in hardware and R&D to build and maintain. But unlike fabs, LLMs are software-based and can be reproduced and distributed easily.

LLMs as Operating Systems: Much like an operating system (OS), LLMs are complex ecosystems that manage interactions with other software and data. The LLM landscape resembles the OS market, which split into closed-source and open-source platforms (e.g., Windows vs. Linux). Today, we have closed providers like OpenAI and emerging open-weight alternatives like Llama.

LLMs are becoming the backbone of future software—more like utilities and operating systems than just “models.”

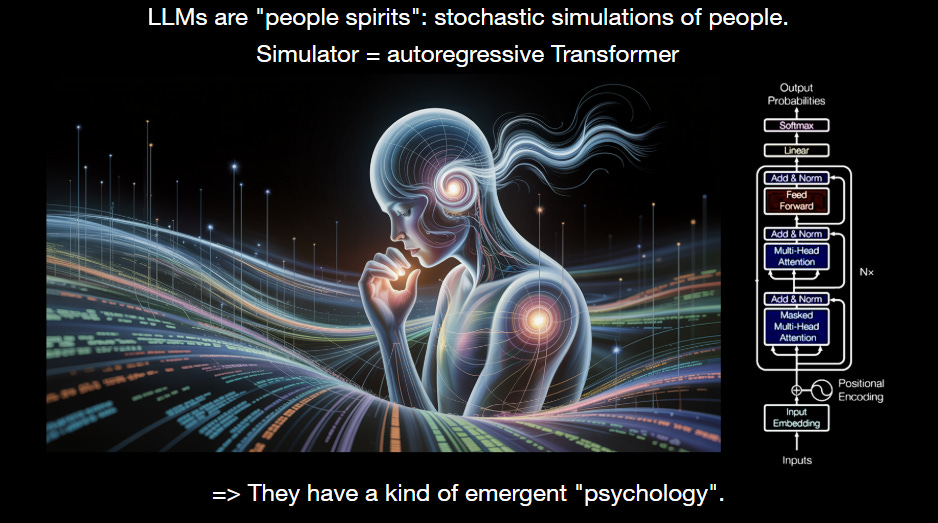

3. LLM Psychology: Superpowers and Cognitive Deficits

Karpathy went deeper into the “psychology” of LLMs, comparing them to human cognition:

Superpowers: LLMs possess encyclopedic knowledge, far surpassing a single human’s capacity. They can retrieve information on a massive scale.

Cognitive Deficits: Despite their superhuman capabilities, LLMs exhibit several cognitive flaws:

Hallucinations: LLMs may fabricate facts that aren't true.

Jagged Intelligence: LLMs excel at many demanding tasks yet make errors no human would, such as miscounting the letters in a simple word or claiming that 9.11 is greater than 9.9.

Anterograde Amnesia: LLMs do not build up lasting memory on their own. Unless earlier context is explicitly supplied again, each interaction starts fresh.

Gullibility: LLMs can be manipulated by malicious or misleading input (prompt injection) and may be tricked into leaking private data.

LLMs are essentially “stochastic simulations of people,” with great strengths and significant limitations. Understanding both is crucial for working effectively with these models.
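The amnesia point has a practical consequence for application code: if the model keeps nothing between calls, the application has to carry the conversation and resend it each time. The following is a minimal sketch under that assumption. The `Conversation` class is hypothetical, the message-count limit is a crude stand-in for a token-based context window, and no real model API is called.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Conversation:
    """Holds the only 'memory' a stateless model gets: the message list."""

    max_messages: int = 6
    messages: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Crude stand-in for a finite context window: drop the oldest turns.
        overflow = len(self.messages) - self.max_messages
        if overflow > 0:
            del self.messages[:overflow]

    def as_context(self) -> List[Dict[str, str]]:
        """Return a copy of what would be resent to the model on every call."""
        return list(self.messages)


if __name__ == "__main__":
    conv = Conversation(max_messages=2)
    conv.add("user", "My name is Ada.")
    conv.add("assistant", "Nice to meet you, Ada.")
    conv.add("user", "What is my name?")
    # The first message has been dropped, so the model would no longer
    # see the name unless the application stores it somewhere else.
    print(conv.as_context())
```

Anything that falls out of the resent context is simply gone from the model's point of view, which is why long-lived facts need to be stored and re-supplied by the application.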

4. Building Partial Autonomy Apps and Human-AI Collaboration

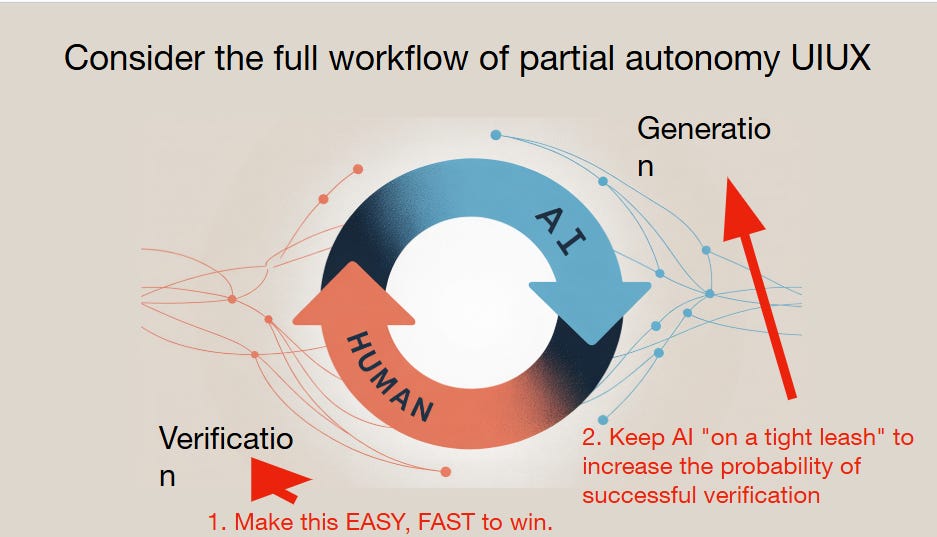

One of the most exciting opportunities lies in creating partial autonomy apps, where AI performs tasks but humans stay in the loop for verification and oversight. This could include applications where LLMs generate responses, but the final output is still verified by humans to ensure accuracy and relevance.

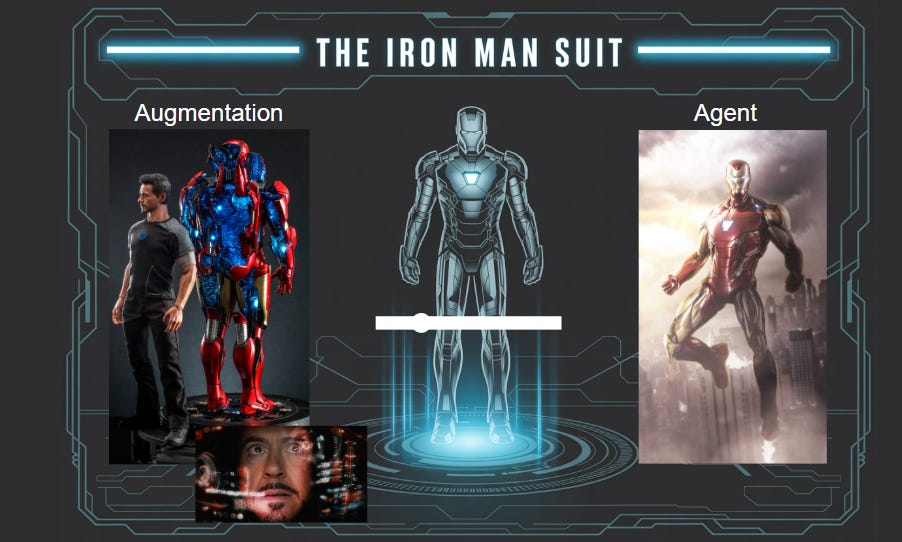

Autonomy Slider: Karpathy suggested introducing an autonomy slider in apps that allows users to adjust the level of AI involvement, from providing basic suggestions to handling complex tasks independently.

Human-AI Collaboration: The ideal AI-powered app will facilitate the “generation-verification loop.” AI generates, humans verify. This allows for faster, more efficient workflows without compromising accuracy or control.

Iron Man Suit Analogy: Karpathy emphasized the need to augment human capabilities, not replace them entirely. Think of building augmentations—tools and interfaces that help humans perform tasks more efficiently, but with the option to intervene whenever necessary.

The future isn’t about fully autonomous agents; it’s about human-AI collaboration, with the autonomy slider allowing humans to choose how much control they want to give to the AI.
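One way to picture the autonomy slider and the generation-verification loop together is as a setting that decides which AI-proposed changes are applied and which wait for a human. This is a minimal sketch, not a description of any real product. The three levels, the `run_loop` function, and the `approve` callback (which stands in for a human reviewer) are all illustrative assumptions.

```python
from enum import IntEnum
from typing import Callable, List, Tuple


class Autonomy(IntEnum):
    SUGGEST = 1      # AI only proposes; nothing is applied automatically
    REVIEW_EACH = 2  # AI applies a change only after a human approves it
    AUTO = 3         # AI applies every change without asking


def run_loop(
    proposals: List[str],
    level: Autonomy,
    approve: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Return (applied, pending) after one generate-then-verify pass."""
    applied: List[str] = []
    pending: List[str] = []
    for change in proposals:
        if level == Autonomy.AUTO:
            applied.append(change)
        elif level == Autonomy.REVIEW_EACH and approve(change):
            applied.append(change)
        else:
            # SUGGEST level, or a rejected change: left for the human.
            pending.append(change)
    return applied, pending


if __name__ == "__main__":
    proposed = ["rename variable", "delete config file"]

    def cautious(change: str) -> bool:
        # Hypothetical reviewer that rejects anything destructive.
        return "delete" not in change

    print(run_loop(proposed, Autonomy.REVIEW_EACH, cautious))
```

Moving the slider from `SUGGEST` toward `AUTO` trades verification effort for speed, which is the choice the slider is meant to hand to the user.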

5. Vibe Coding: The Democratization of Programming

"Vibe coding" is a new concept that allows anyone to create software, regardless of their technical knowledge, simply by using natural language to communicate with an AI. Karpathy illustrated this with MenuGen, an app whose core he built in just hours with the help of LLMs. Setting up real-world integrations like authentication, payment systems, and deployment then took a full week.

Key Challenge: While the AI makes the coding part easy, devops, authentication, and deployment remain tedious manual processes. This reveals a gap where AI can help, but human effort is still needed to “make it real.”

Vibe coding is a game-changer for software accessibility, but the real-world implementation still requires significant manual intervention in non-coding tasks.

6. Building Software for Agents: Making it LLM-Friendly

Karpathy introduced the idea of agents: AI systems that consume and act on digital information much as humans do. To support these agents, software infrastructure must be adapted to be more LLM-friendly.

LLM-Friendly Documentation: Moving beyond human-centric documentation, the focus should shift to making content easier for LLMs to read, for example by publishing it as plain Markdown.

Actionable Documentation: Instead of "click this" instructions that only a human can follow, documentation should give the equivalent API calls or curl commands, which an LLM agent can execute directly.

As agents take on more active roles, we need to evolve our documentation and software infrastructures to be more LLM-friendly and reduce friction in AI-human interactions.
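As a rough illustration of actionable documentation, a docs generator could render each step as a command instead of a UI instruction. The sketch below assumes a hypothetical helper, `curl_for_step`, and a made-up endpoint on `api.example.com`. It only builds the command string and never sends a request.

```python
import json
import shlex
from typing import Optional


def curl_for_step(method: str, url: str, payload: Optional[dict] = None) -> str:
    """Render one documentation step as a copy-pasteable curl command."""
    parts = ["curl", "-X", method.upper(), url]
    if payload is not None:
        parts += [
            "-H", "Content-Type: application/json",
            "-d", json.dumps(payload),
        ]
    # shlex.join quotes each argument so the result is safe to paste in a shell.
    return shlex.join(parts)


if __name__ == "__main__":
    # Human-oriented step: "Click 'New Project' and enter a name."
    # Agent-oriented equivalent (hypothetical endpoint):
    print(curl_for_step("post", "https://api.example.com/v1/projects", {"name": "demo"}))
```

A step written this way can still be read by a person, but an agent can also run it without having to drive a graphical interface.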

7. The Decade of Agents: Preparing for the Future of Software

We are entering the Decade of Agents, a time when AI agents will not only assist with tasks but also take on more autonomous roles. This shift is akin to the personal computing revolution of the 1980s. The software of the future will likely include more AI-powered agents, transforming how we work and interact with technology.

The next decade will be about integrating AI agents into our software systems, gradually moving the autonomy slider from human augmentation toward greater autonomy.

Conclusion: Why This Matters

The AI revolution is here, and it's reshaping industries across the globe. If you’re a software developer, product manager, or business leader, understanding how to integrate Software 3.0, LLMs, and partial autonomy into your products and services will be key to staying competitive in the next decade. As Karpathy pointed out, the next 10 years will be the "Decade of Agents", and there’s no better time to get involved.

Ready to Build the Future? Embrace the shift to AI-driven software. How will LLMs and AI agents change the way you work or develop new products?

References:

Talk by Andrej Karpathy at YC AI Startup School

Image Credits: Andrej Karpathy’s Talk Video
